That’s Their Voice. But It’s Not Them.

3 seconds of audio needed to clone a voice

85% of people can’t distinguish cloned voices

30 seconds to verify with Certifyd

The reality of voice cloning in the UK.

Voice cloning protection with Certifyd eliminates the voice channel as a trusted identity signal. AI voice cloning can now replicate any person’s voice from a few seconds of recorded audio — enough to fool colleagues, family members, and bank voice-recognition systems. Certifyd replaces voice-based trust with device-bound cryptographic authentication. When identity matters, the person verifies through their registered device, not through their voice. A cloned voice cannot complete a passkey challenge.

Modern voice cloning requires as little as three seconds of audio to create a convincing replica of someone’s voice. Public figures, executives, and anyone with a social media presence or a podcast appearance have enough recorded audio online for their voice to be cloned. Research shows that 85% of people cannot reliably distinguish a cloned voice from the real thing.

Voice has historically been treated as a trust signal. We recognise colleagues, family members, and business contacts by their voice. Phone calls are considered more trustworthy than emails because ‘I spoke to them directly.’ Banks use voice recognition for authentication. All of these trust assumptions are now compromised. A criminal with a £100 voice cloning tool and three seconds of a CEO’s podcast appearance can generate any instruction in that CEO’s voice.

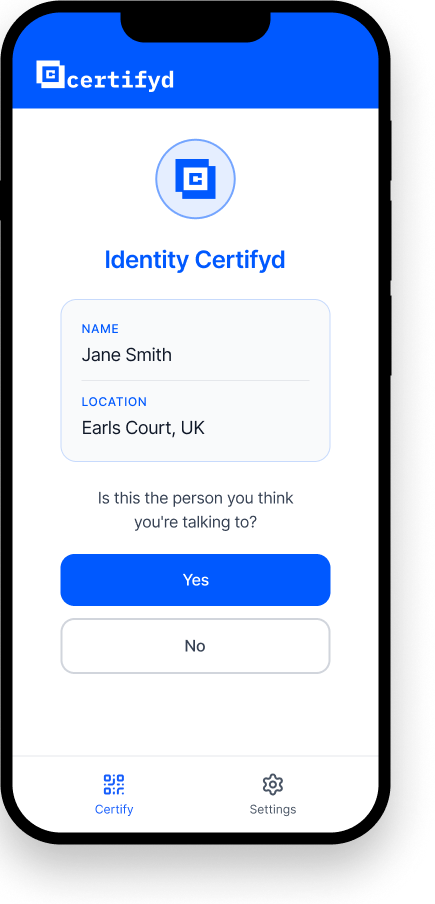

Certifyd solves this by removing voice from the trust equation entirely. Identity is verified through a device-bound cryptographic passkey, not through auditory recognition. When someone receives a suspicious call, they can ask the caller to verify through Certifyd, a 30-second process that the real person can complete and an impersonator cannot. This treats voice as what it actually is: an easily spoofed medium, not a trusted channel.
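To make the idea concrete, here is a minimal sketch of a device-bound challenge and response. It is an illustration under stated assumptions, not Certifyd's implementation: the names `RegisteredDevice` and `Verifier` are hypothetical, and because Python's standard library has no public-key signatures, an HMAC over a device-held secret stands in for the passkey signature. A real passkey keeps a private key on the device and shares only a public key.

```python
import hashlib
import hmac
import secrets


class RegisteredDevice:
    """Hypothetical model of the phone enrolled for one person."""

    def __init__(self) -> None:
        # Stand-in for the passkey's private key, which on a real device
        # never leaves secure hardware.
        self._secret = secrets.token_bytes(32)

    def enrolment_key(self) -> bytes:
        # Simplification: with HMAC the verifier holds the same secret.
        # A real passkey would share only a public key at enrolment.
        return self._secret

    def respond(self, challenge: bytes) -> bytes:
        # Prove possession of the secret by keying a MAC over the challenge.
        return hmac.new(self._secret, challenge, hashlib.sha256).digest()


class Verifier:
    """Hypothetical verification service for one enrolled person."""

    def __init__(self, enrolled_key: bytes) -> None:
        self._key = enrolled_key

    def new_challenge(self) -> bytes:
        # A fresh random value per attempt, so old responses cannot be replayed.
        return secrets.token_bytes(32)

    def check(self, challenge: bytes, response: bytes) -> bool:
        expected = hmac.new(self._key, challenge, hashlib.sha256).digest()
        return hmac.compare_digest(expected, response)


real_phone = RegisteredDevice()
verifier = Verifier(real_phone.enrolment_key())
challenge = verifier.new_challenge()

# The real person answers from the registered device.
print(verifier.check(challenge, real_phone.respond(challenge)))

# An impersonator has the voice but not the device, so can only guess.
print(verifier.check(challenge, secrets.token_bytes(32)))
```

The first check passes. The second fails except with negligible probability, however convincing the caller sounds, because nothing about the voice enters the calculation.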

This is broken. Here's why.

- Voice cloning requires only seconds of audio, so any public figure or executive is a potential target.
- Most people cannot distinguish cloned voices from real ones, including trained professionals.
- Voice has historically been treated as a trust signal for identity. That assumption is now broken.
- Bank voice recognition, phone-based authorisations, and verbal instructions are all vulnerable.

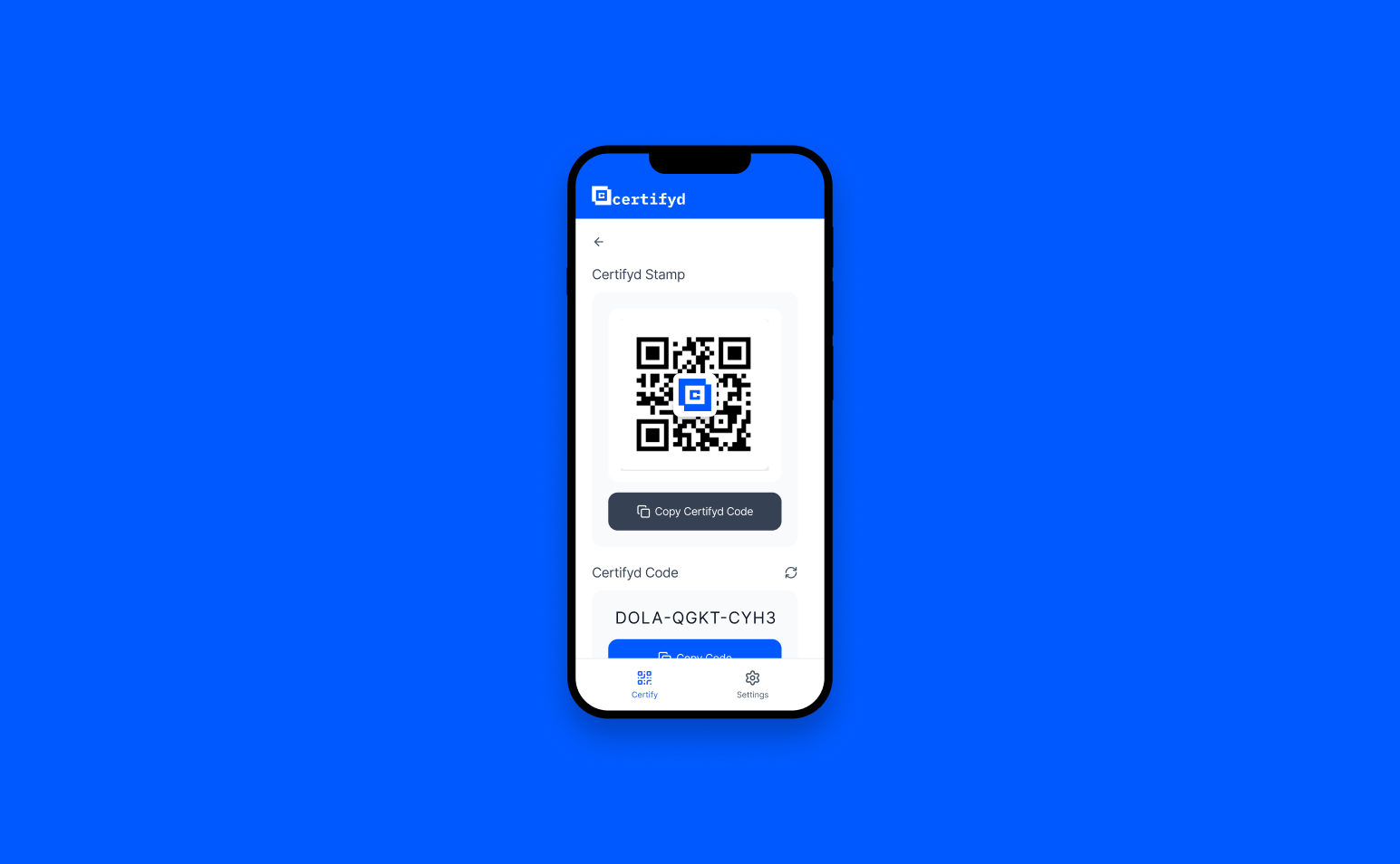

Simple verification. Every time.

1. A suspicious call or voice message arrives, supposedly from a known person.
2. The recipient asks the caller to verify through Certifyd. No voice analysis is needed.
3. The caller must complete a device-bound passkey challenge on their registered phone.
4. If they can’t complete it, the call is flagged as a potential impersonation, regardless of how convincing the voice sounds.
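The steps above can be sketched as a small decision function. This is a hedged illustration only: `VerificationRequest`, `Outcome`, and `handle_suspicious_call` are hypothetical names, the `complete_challenge` callback stands in for whatever actually delivers the passkey challenge to the registered phone, and treating the 30-second figure as a timeout is an assumption.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Optional


class Outcome(Enum):
    VERIFIED = "verified"
    FLAGGED = "flagged as potential impersonation"


@dataclass
class VerificationRequest:
    claimed_identity: str
    # Assumption: the 30-second process is modelled as a time limit.
    timeout_seconds: float = 30.0


# Hypothetical callback: sends the challenge to the registered phone and
# returns True (completed), False (failed), or None (no response in time).
ChallengeFn = Callable[[str, float], Optional[bool]]


def handle_suspicious_call(
    request: VerificationRequest, complete_challenge: ChallengeFn
) -> Outcome:
    result = complete_challenge(request.claimed_identity, request.timeout_seconds)
    # Only an explicit success verifies the caller. A failure or silence is
    # flagged. How the voice sounded is deliberately not an input.
    return Outcome.VERIFIED if result is True else Outcome.FLAGGED


request = VerificationRequest(claimed_identity="Finance Director")
print(handle_suspicious_call(request, lambda who, limit: True).value)
print(handle_suspicious_call(request, lambda who, limit: None).value)
```

The design choice worth noting is the default: anything other than a completed challenge ends in a flag, so an impersonator gains nothing by stalling or ignoring the request.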

Ready to see it in action?

Book a demo or tell us about your needs.

“They created a voice model of his voice from public recordings. It sounded a bit like him, but knowing him well enough, I knew it wasn’t him. Most people wouldn’t.”

Cybersecurity professional, 20 years’ experience

Common questions.

Modern voice cloning uses AI to analyse a sample of someone’s voice (as little as 3 seconds) and create a model that can generate any text in that voice. The output is convincing enough to fool family members, colleagues, and even voice-recognition software. Tools are commercially available for under £100, and open-source alternatives are free. Anyone with a recorded voice — from a podcast, social media, or conference talk — is a potential target.

Voice deepfake detection faces the same arms race as visual deepfake detection: every improvement in detection trains the next generation of cloning tools to evade it. Detection accuracy varies widely, and real-time detection during a live call is even less reliable. Certifyd takes a fundamentally different approach — it doesn’t try to analyse the voice at all. It verifies the person through their physical device, making the voice irrelevant to the identity proof.

Yes, businesses of every size are at risk. Voice cloning is no longer limited to state actors or sophisticated criminals. Consumer-grade tools are widely available, and the barrier to entry is a few seconds of audio. Any business where verbal instructions trigger actions, such as payment authorisations, access grants, or information sharing, is at risk. The Arup deepfake attack, which combined voice and video cloning, cost over £20 million. Smaller businesses have been targeted for five- and six-figure sums.

Certifyd doesn’t replace phone calls — it adds a verification step alongside them. When a call’s authenticity matters, the recipient can ask the caller to verify through Certifyd (a quick passkey authentication on their phone). This can happen during the call or immediately after. The verification record confirms the caller’s identity cryptographically, regardless of what their voice sounded like.


Verify identity beyond what voices can prove

Book a demo to see how Certifyd works for your team, or tell us about your verification needs and we'll get back to you within 24 hours.
